Recommended open-source chat projects based on Vercel and the GPT API

Over the past year, I have tried many web apps built on Vercel and the GPT API (as well as Gemini, Claude, Qwen, and others).

They are helpful for productivity and research tasks.

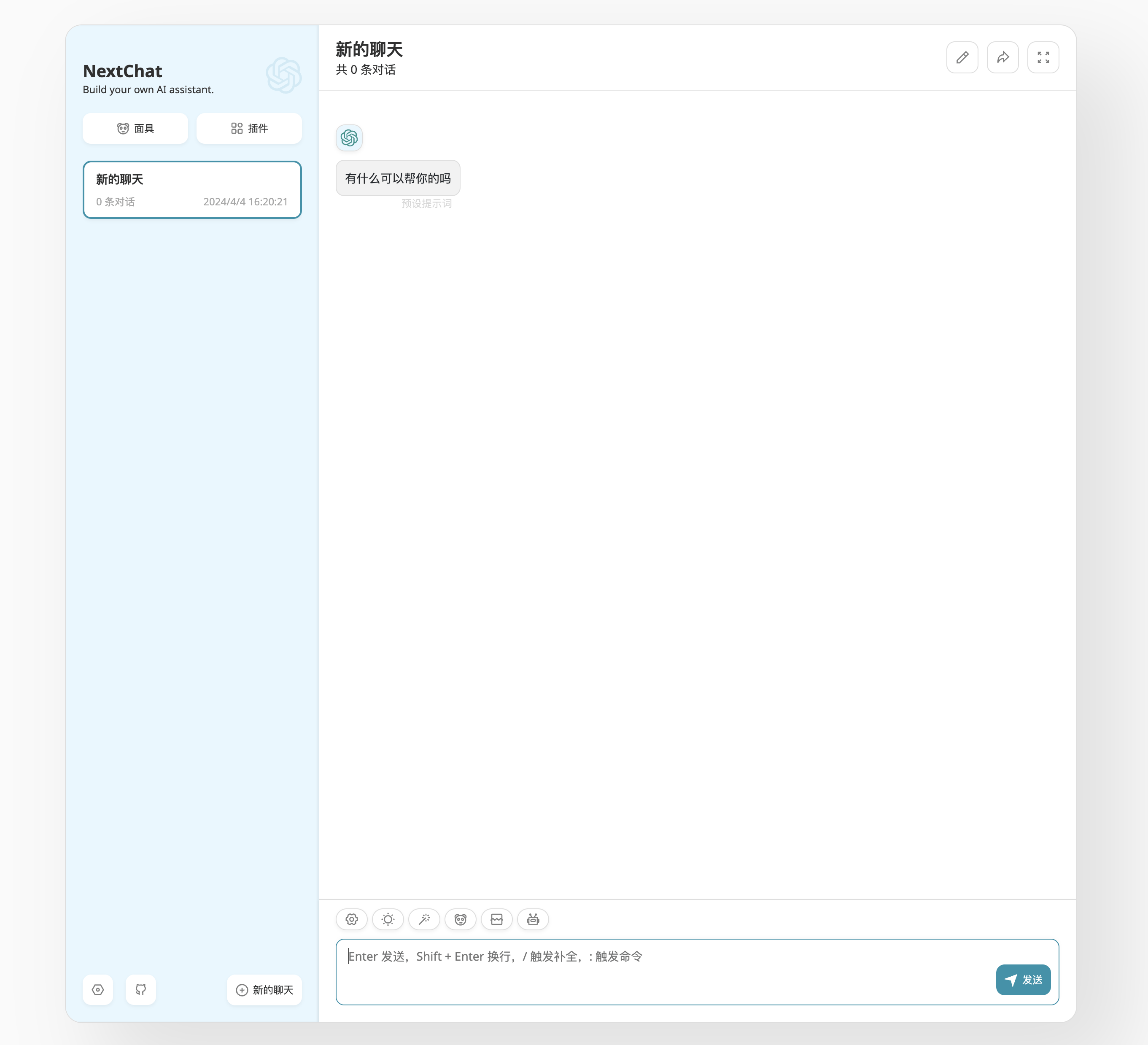

1. ChatGPT-Next-Web

1.1 Introduction

Undoubtedly, this is one of the most famous open-source AI chat projects on GitHub. At the time of writing, it has 66.3K stars and 54.1K forks (more than most open-source projects on GitHub!). The project supports GPT-3, GPT-4, and Gemini Pro, and any other LLM that exposes an OpenAI-compatible API can be used as well.

Features:

Free one-click deployment on Vercel in under 1 minute

Compact client (~5 MB) for Linux/Windows/macOS (thanks to Tauri)

Fully compatible with self-deployed LLMs

Privacy first: all data is stored locally in the browser

Markdown support: LaTeX, Mermaid, code highlighting, etc.

Responsive design, dark mode, and PWA

Fast first-screen loading (~100 KB) and support for streaming responses

Automatically compresses chat history to support long conversations while also saving tokens
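The history compression feature can be pictured roughly as follows. This is a minimal sketch, not ChatGPT-Next-Web's actual code: it assumes a hypothetical `summarize` callback (in a real app, a request asking the model for a recap) and a fixed number of recent messages that are kept verbatim, while everything older is folded into a single summary message.

```python
from typing import Callable

Message = dict[str, str]  # {"role": ..., "content": ...}


def compress_history(
    messages: list[Message],
    summarize: Callable[[list[Message]], str],
    keep_recent: int = 4,
) -> list[Message]:
    """Fold all but the last `keep_recent` messages into one summary message."""
    if len(messages) <= keep_recent:
        return list(messages)  # short conversation: send it unchanged
    split = len(messages) - keep_recent
    older, recent = messages[:split], messages[split:]
    # `summarize` is a hypothetical callback; a real app would ask the LLM for a recap.
    summary = summarize(older)
    memory = {"role": "system", "content": f"Summary of earlier conversation: {summary}"}
    return [memory] + recent
```

Keeping the latest turns verbatim preserves the immediate context, while the summary caps how many tokens the older part of the conversation costs.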

The project's immense popularity can be attributed to two primary factors:

First, the interface of ChatGPT-Next-Web has a clean and aesthetically pleasing layout. Second, the deployment process is remarkably convenient and user-friendly: with an easy-to-follow README, anyone can build their own web app within 10 minutes.

1.2 Deployment

1.2.1 Vercel (the recommended method)

Get an OpenAI API key (this step hardly needs a reminder, does it?)

Open the project's GitHub page, click the "Deploy" button for Vercel, and then set the following environment variables:

CODE (optional): an access password. If you don't set one, anyone who finds your deployment URL can use it and spend your API quota.

OPENAI_API_KEY (required)

BASE_URL (optional): users like me who cannot access OpenAI's API server directly need to point this at a proxy API.

GOOGLE_API_KEY (optional): API key for Gemini

GOOGLE_URL (optional): like BASE_URL, but for Google

HIDE_USER_API_KEY (optional): if you don't want users to enter their own API key, set this value to 1

DISABLE_GPT4 (optional): if you don't want users to use GPT-4, set this value to 1

CUSTOM_MODELS (optional, empty by default): controls the model list. Use `+` to add a custom model, `-` to hide a model, and `name=displayName` to customize a model's display name, separated by commas. Use `-all` to disable all default models and `+all` to enable all default models.

For example, `+llama,+claude-2,-gpt-3.5-turbo,gpt-4-1106-preview=gpt-4-turbo` adds llama and claude-2 to the model list, removes gpt-3.5-turbo from the list, and displays gpt-4-1106-preview as gpt-4-turbo.
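To make the CUSTOM_MODELS rule syntax concrete, here is a minimal sketch of how such a string could be applied to a default model list, processing rules left to right. It is an illustration, not the project's own parser: the function name `apply_custom_models` and the sample default list are hypothetical, and `+all`/`-all` are simplified to show or hide every model known so far.

```python
def apply_custom_models(defaults: list[str], spec: str) -> list[tuple[str, str]]:
    """Apply a CUSTOM_MODELS-style rule string; return visible (model_id, display_name) pairs."""
    # Every default model starts out visible under its own name.
    models = {name: {"visible": True, "display": name} for name in defaults}
    for raw in spec.split(","):
        rule = raw.strip()
        if not rule:
            continue
        if rule in ("-all", "+all"):
            # Simplification: hide or show every model known so far.
            for info in models.values():
                info["visible"] = rule == "+all"
        elif rule.startswith("-"):
            # Hide a single model, if it exists.
            if rule[1:] in models:
                models[rule[1:]]["visible"] = False
        else:
            # "+name", "name=displayName" or "+name=displayName":
            # make the model visible and optionally rename it.
            name, _, display = rule.removeprefix("+").partition("=")
            current = models.get(name, {"display": name})
            models[name] = {"visible": True, "display": display or current["display"]}
    return [(name, info["display"]) for name, info in models.items() if info["visible"]]
```

With the hypothetical defaults `["gpt-3.5-turbo", "gpt-4", "gpt-4-1106-preview"]`, the example rule string yields gpt-4, gpt-4-1106-preview shown as gpt-4-turbo, llama, and claude-2.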

1.2.2 Docker

```shell
docker pull yidadaa/chatgpt-next-web

docker run -d -p 3000:3000 \
   -e OPENAI_API_KEY=sk-xxxx \
   -e CODE=your-password \
   yidadaa/chatgpt-next-web
```

You can also start the service behind a proxy:

```shell
docker run -d -p 3000:3000 \
   -e OPENAI_API_KEY=sk-xxxx \
   -e CODE=your-password \
   -e PROXY_URL=http://localhost:7890 \
   yidadaa/chatgpt-next-web
```

If your proxy requires a password, use:

```shell
-e PROXY_URL="http://127.0.0.1:7890 user pass"
```
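The space-separated "URL user pass" form is specific to this project; most HTTP clients instead expect the credentials embedded in the URL itself (`http://user:pass@host:port`). The sketch below converts one form into the other so the two are easy to compare. It assumes the value has either one part or exactly three space-separated parts, and the helper name `proxy_with_credentials` is hypothetical, not the project's parsing code.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def proxy_with_credentials(value: str) -> str:
    """Turn 'http://host:port user pass' into 'http://user:pass@host:port'."""
    parts = value.split()
    if len(parts) == 1:
        return parts[0]  # no credentials given: use the URL as-is
    url, user, password = parts  # assumption: exactly three parts
    s = urlsplit(url)
    # Percent-encode credentials so characters like '@' or ':' don't break the URL.
    netloc = f"{quote(user, safe='')}:{quote(password, safe='')}@{s.netloc}"
    return urlunsplit((s.scheme, netloc, s.path, s.query, s.fragment))
```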

2. Lobe Chat

2.1 Introduction and features

This project can be regarded as the most powerful and versatile open-source GPT application. At first glance, Lobe Chat's user interface appears more complex than Next-Web's, but the more complex UI brings a greater array of powerful features, such as multiple AI providers, multimodality (vision/TTS), and a plugin system.

On the other hand, this makes the project rather bloated, with slow responses and subpar page performance. You gain some, you lose some, right?

Multi-Model Service Provider Support

OpenAI does not provide API services to developers in some regions, such as China and Russia, so they have to use a proxy provider's service. Developers who want to use models from various companies through a single api_url may use a project like oneapi. For both groups, a custom api_url and custom model service providers are helpful.

Therefore, Lobe Chat supports multiple model service providers, offering users a richer and more diverse selection of models to chat with. Supported model service providers:

AWS Bedrock: I have to say that Amazon has been advertising heavily recently. Not only can you see its ads on WeChat official accounts, but one day even a business school classmate mentioned Amazon Bedrock to me.

Anthropic (Claude)

Google AI (Gemini)

ChatGLM, Moonshot, 01, Qwen

Groq

OpenRouter (a Western proxy API provider whose main purpose seems to be unifying various APIs behind a single interface)
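Proxy providers such as OpenRouter and gateway projects such as oneapi can slot in because they accept the same OpenAI-style request at a different base URL. Below is a minimal sketch, using only Python's standard library, of what a client does with a custom api_url; the proxy host, key, and the helper name `build_chat_request` are placeholders, and it assumes the base URL does not already end in `/v1`.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Assumption: base_url does not already include "/v1".
    url = base_url.rstrip("/") + "/v1/chat/completions"
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` sends it. Switching providers only changes `base_url`, `api_key`, and `model`, which is why a single custom api_url setting covers so many services.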

Local Large Language Model (LLM) Support

LobeChat also supports local models via Ollama, allowing users to flexibly use their own or third-party models.
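For a sense of what talking to a local model involves, here is a minimal sketch against Ollama's native chat endpoint, which listens on `http://localhost:11434` by default. The model name `llama3` is only an example (it has to be downloaded first with `ollama pull`), and how LobeChat itself connects to Ollama is not shown here.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def build_ollama_chat(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming request to Ollama's /api/chat endpoint."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}], "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(raw: bytes) -> str:
    # A non-streaming /api/chat response carries the answer under message.content.
    return json.loads(raw)["message"]["content"]
```

With Ollama running, `extract_reply(urllib.request.urlopen(build_ollama_chat("llama3", "Hello")).read())` returns the model's answer; no API key is involved because everything stays on your machine.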

Model Visual Recognition

The project now supports the gpt-4-vision model, a multimodal model with visual recognition capabilities. Users can upload or drag and drop images into the dialog box, and the agent will recognize the image content and carry on the conversation based on it, enabling smarter and more varied chat scenarios.
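Under the hood, an uploaded image travels to a vision model as part of the message content. The sketch below follows OpenAI's Chat Completions format for image input (a content array mixing a text part and an `image_url` part, here a base64 data URL); whether LobeChat builds the message exactly this way is an assumption, and `image_message` is a hypothetical helper.

```python
import base64


def image_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a user message that pairs a question with an inline image."""
    # Encode the raw image as a data URL so no separate upload is needed.
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }
```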

TTS & STT Voice Conversation

This feature isn't important to me, but I suppose some users might use it to chat with their virtual girlfriends or boyfriends. With OpenAI's advanced TTS (text-to-speech) and STT (speech-to-text) models, anyone can interact with a conversational agent as if talking to a real person.

Moreover, TTS is an excellent solution for people who prefer auditory learning or want to receive information while busy. Users can choose between OpenAI and Microsoft Edge Speech, and they can pick the voice that suits their personal preferences or the scenario, for a personalized experience.
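Choosing a voice ultimately comes down to one request parameter. Here is a minimal sketch of an OpenAI text-to-speech request built with the standard library; the `tts-1` model and the `alloy`/`nova` voices are OpenAI's, while `build_speech_request` is a hypothetical helper and the Microsoft Edge Speech path is not covered.

```python
import json
import urllib.request


def build_speech_request(api_key: str, text: str, voice: str = "alloy") -> urllib.request.Request:
    """Build (but do not send) a request to OpenAI's /v1/audio/speech endpoint."""
    # The voice is just a string parameter, so switching voices is a one-word change.
    body = {"model": "tts-1", "input": text, "voice": voice}
    return urllib.request.Request(
        "https://api.openai.com/v1/audio/speech",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
```

The response body is audio (MP3 by default), so a client would write `urlopen(req).read()` to a file or hand it straight to an audio player.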

Text to Image Generation

You can use the latest text-to-image technologies, such as DALL-E 3, Midjourney, and Stable Diffusion, to draw what you want simply by chatting with the agents.

DALL-E: can be invoked directly if your API key has the necessary access.

Midjourney: requires you to deploy a reverse-proxy API on your own server (Docker makes this convenient).

Stable Diffusion: I have never tried it, but I expect the setup is similar to Midjourney's :D
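The "invoked directly" DALL-E path is a single API call. Below is a minimal sketch of an OpenAI image-generation request for `dall-e-3`, which accepts only three sizes; `build_image_request` and `extract_image_url` are hypothetical helpers, and the sketch assumes the default response format, which returns a URL to the generated image.

```python
import json
import urllib.request

DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}  # sizes dall-e-3 accepts


def build_image_request(api_key: str, prompt: str, size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) a request to OpenAI's /v1/images/generations endpoint."""
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported size for dall-e-3: {size}")
    body = {"model": "dall-e-3", "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        "https://api.openai.com/v1/images/generations",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def extract_image_url(raw: bytes) -> str:
    # With the default response format, each generated image comes back as a URL.
    return json.loads(raw)["data"][0]["url"]
```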

Plugin System (Function Calling)

Thanks to the function calling feature OpenAI released last year, you can now invoke a variety of plugins. With plugins, LobeChat assistants can fetch and process real-time information, such as searching the web and providing users with timely, relevant news.

Still, I remain pessimistic that the open-source community can outperform major corporations such as ByteDance (Coze). Its efforts can only be regarded as one of the cornerstones of multi-agent LLM architecture.
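To make the plugin mechanism more concrete, here is a minimal sketch of the function-calling round trip in OpenAI's tool format: the app advertises a tool schema, the model replies with `tool_calls` whose arguments arrive as a JSON string, and the app runs the matching function and sends back `tool` messages. LobeChat's own plugin manifests differ; `search_web` is a hypothetical stub standing in for a real search plugin.

```python
import json
from typing import Callable


def search_web(query: str) -> str:
    """Hypothetical plugin: a real one would call a search API."""
    return f"Top results for {query!r} (stub)"


# Tool schema in OpenAI's function-calling format, sent along with the chat request.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "search_web",
        "description": "Search the web for up-to-date information.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string", "description": "Search keywords"}},
            "required": ["query"],
        },
    },
}]

# Maps tool names the model may request to local Python functions.
REGISTRY: dict[str, Callable[..., str]] = {"search_web": search_web}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute each tool call the model requested and build the 'tool' replies."""
    replies = []
    for call in tool_calls:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        replies.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return replies
```

The replies are appended to the conversation and sent back to the model, which then writes its final answer using the fetched information.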